miniHPC: SMALL BUT MODERN HPC

Funding: University of Basel

Duration: 15.12.2016-Present

Project Summary

miniHPC is a small high-performance computing (HPC) cluster. The purpose of designing and purchasing miniHPC is two-fold:

(1) to offer a platform for teaching students parallel programming techniques that achieve high-performance computation, and

(2) to have a fully controlled experimental platform for conducting leading-edge scientific investigations in HPC.

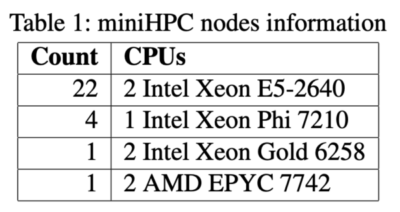

The miniHPC has a peak performance of 28.9 double-precision TFLOP/s. It has 4 types of nodes (see Table 1): Intel Xeon E5-2640 nodes, Intel Xeon Phi Knights Landing (KNL) 7210 nodes, Intel Xeon Gold 6258R nodes, and AMD EPYC 7742 nodes (see Table 2). The Intel Xeon E5-2640 group consists of 22 computing nodes, 1 login node, and 1 storage node. The Intel Xeon Phi group consists of 4 computing nodes. The single Intel Xeon Gold 6258R node and the single AMD EPYC 7742 node are both computing nodes.
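The node breakdown can be tallied as a quick consistency check against the total of 30 nodes on the Omni-Path network. This is a minimal Python sketch; the dictionary layout and the helper names `NODES`, `total_nodes`, and `nodes_by_role` are illustrative and not part of any miniHPC tooling. The counts come from the description of the cluster, with one node each of the Xeon Gold 6258R and EPYC 7742 types.

```python
# Node inventory of miniHPC, grouped by processor type and role.
# Counts follow the project description; the data layout is illustrative only.
NODES = {
    "Intel Xeon E5-2640": {"compute": 22, "login": 1, "storage": 1},
    "Intel Xeon Phi KNL 7210": {"compute": 4},
    "Intel Xeon Gold 6258R": {"compute": 1},
    "AMD EPYC 7742": {"compute": 1},
}


def total_nodes(inventory):
    """Sum every node, regardless of its role."""
    return sum(sum(roles.values()) for roles in inventory.values())


def nodes_by_role(inventory, role):
    """Count the nodes that serve a given role, e.g. 'compute'."""
    return sum(roles.get(role, 0) for roles in inventory.values())


print(total_nodes(NODES))                # 30
print(nodes_by_role(NODES, "compute"))   # 28
```

Of the 30 nodes, 28 are computing nodes; the remaining two are the login and storage nodes in the Xeon E5-2640 group.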

- http://ark.intel.com/products/92984/Intel-Xeon-Processor-E5-2640-v4-25M-Cache-2_40-GHz

- http://ark.intel.com/products/94033/Intel-Xeon-Phi-Processor-7210-16GB-1_30-GHz-64-core

- https://ark.intel.com/content/www/us/en/ark/products/199350/intel-xeon-gold-6258r-processor-38-5m-cache-2-70-ghz.html

- https://www.gigabyte.com/Enterprise/GPU-Server/G482-Z52-rev-100#Specifications

The Intel Xeon Gold 6258R node contains two NVIDIA A100-PCIE-40GB GPUs (https://images.nvidia.com/data-center/a100/a100-datasheet.pdf).

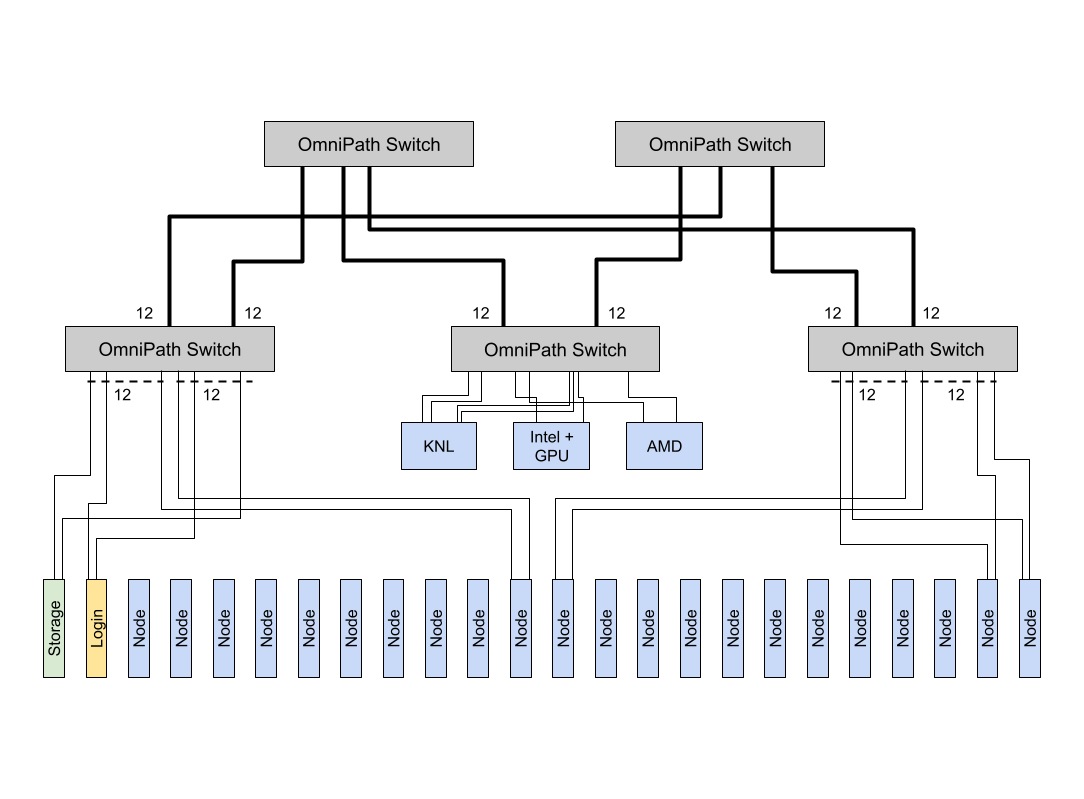

All nodes are interconnected through two different types of networks. The first is a 10 Gbit/s Ethernet network, reserved for user and administrator access. The second, faster network is a 100 Gbit/s Intel Omni-Path network, reserved for high-speed communication between the computing nodes. This second network interconnects the 30 nodes of the miniHPC cluster (24 Intel Xeon E5-2640, 4 Intel Xeon Phi (KNL), 1 Intel Xeon Gold 6258R, and 1 AMD EPYC 7742) in a two-level fat-tree topology.

Graphical illustration of the miniHPC two-level fat-tree topology. Number of nodes: 30 (24 Intel Xeon E5-2640, 4 Intel Xeon Phi (KNL), 1 Intel Xeon Gold 6258R, and 1 AMD EPYC 7742). Number of switches: 5. Number of links: 196.
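To illustrate what a two-level fat tree means in practice, the following Python sketch models compute nodes cabled to leaf switches, with every leaf switch cabled to every top-level (spine) switch. The number of leaves, the split of the 30 nodes across them, the single spine, and the number of parallel uplinks (`uplinks_per_pair`) are all hypothetical choices. They yield 5 switches, but they are not meant to reproduce miniHPC's actual cabling or its reported link count of 196. The sketch checks the property such a topology guarantees: any two nodes are at most 4 links apart (node, leaf, spine, leaf, node).

```python
from collections import defaultdict, deque
from itertools import combinations


def build_two_level_fat_tree(nodes_per_leaf, num_spines, uplinks_per_pair=1):
    """Build an adjacency map for a hypothetical two-level (leaf/spine) fat tree.

    nodes_per_leaf: number of compute nodes cabled to each leaf switch.
    num_spines: number of top-level (spine) switches.
    uplinks_per_pair: parallel cables between each leaf/spine pair; more cables
    make the upper level "fatter" (more aggregate bandwidth between leaves).
    Returns (adjacency, hosts, switches, physical_link_count).
    """
    adj = defaultdict(set)
    leaves = [f"leaf{i}" for i in range(len(nodes_per_leaf))]
    spines = [f"spine{j}" for j in range(num_spines)]
    hosts, links = [], 0
    for leaf, count in zip(leaves, nodes_per_leaf):
        for _ in range(count):
            host = f"node{len(hosts)}"
            hosts.append(host)
            adj[host].add(leaf)
            adj[leaf].add(host)
            links += 1
    for leaf in leaves:
        for spine in spines:
            adj[leaf].add(spine)
            adj[spine].add(leaf)
            links += uplinks_per_pair  # parallel cables share one logical edge
    return adj, hosts, leaves + spines, links


def hops(adj, src, dst):
    """Shortest path length in links between two vertices (breadth-first search)."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        cur = queue.popleft()
        if cur == dst:
            return dist[cur]
        for nxt in adj[cur]:
            if nxt not in dist:
                dist[nxt] = dist[cur] + 1
                queue.append(nxt)
    return None  # dst is unreachable from src


# Hypothetical layout: 30 nodes on 4 leaf switches plus 1 spine switch.
adj, hosts, switches, links = build_two_level_fat_tree(
    [8, 8, 7, 7], num_spines=1, uplinks_per_pair=4
)
print(len(hosts), len(switches), links)                           # 30 5 46
print(max(hops(adj, a, b) for a, b in combinations(hosts, 2)))    # 4
```

Raising `uplinks_per_pair` increases the link count and the bandwidth between leaf switches without changing the maximum hop distance, which is what makes the upper level of the tree "fat".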